At the core of InSnap's video content lies an advanced neural network architecture that brings digital characters to life with uncanny realism. The system uses a proprietary blend of generative adversarial networks (GANs) and transformer models to craft facial expressions that mirror human nuance down to the subtlest micro-gestures. What sets InSnap apart is its emotion-mapping algorithm, which analyzes thousands of human performance recordings to create authentic emotional responses. This technology doesn't just animate faces - it gives digital beings a distinct personality, allowing them to convey complex emotions through slight eyebrow movements, lip quirks, and pupil dilation that most viewers can't consciously identify but instinctively recognize as real.

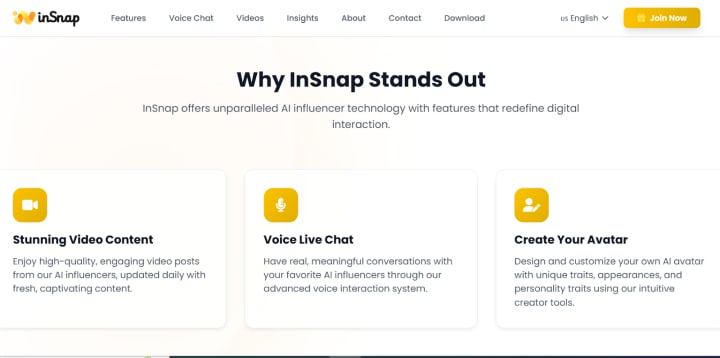

Next-Generation Voice Synthesis Technology

InSnap's AI voice technology goes far beyond standard text-to-speech systems. Its multi-layered vocal synthesis combines phoneme-level precision with emotional intonation algorithms that adapt to the context of the content. The system first deconstructs speech into hundreds of acoustic parameters, then reconstructs it with high clarity and natural rhythm. A separate emotional intelligence layer analyzes the semantic meaning of scripts to apply appropriate vocal stress, pacing, and tone variations. The result is voice output that carries the warmth and imperfections of human speech, complete with natural breaths, slight stumbles, and emotional inflections that make the AI characters sound genuinely alive rather than artificially perfect.
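To make the idea of an emotional layer concrete, here is a deliberately tiny sketch of how a script might be mapped to per-sentence pacing and pitch settings. It is not InSnap's implementation: the cue-word lists, the `Prosody` fields, and the function names are all assumptions made for illustration, and a production system would rely on a trained model rather than keyword matching.

```python
import re
from dataclasses import dataclass

# Hypothetical cue words; a real system would use a trained classifier.
EMOTION_CUES = {
    "excited": {"amazing", "incredible", "love", "wow"},
    "somber": {"sorry", "loss", "sadly", "unfortunately"},
}


@dataclass(frozen=True)
class Prosody:
    rate: float         # speaking-rate multiplier (1.0 = neutral)
    pitch_shift: float  # semitones relative to the neutral voice


# Illustrative settings only, not measured values.
PROSODY_BY_EMOTION = {
    "neutral": Prosody(rate=1.0, pitch_shift=0.0),
    "excited": Prosody(rate=1.15, pitch_shift=2.0),
    "somber": Prosody(rate=0.85, pitch_shift=-1.5),
}


def classify_emotion(sentence: str) -> str:
    """Pick the emotion whose cue words appear most often, else 'neutral'."""
    words = {w.strip(".,!?;:").lower() for w in sentence.split()}
    best, best_hits = "neutral", 0
    for emotion, cues in EMOTION_CUES.items():
        hits = len(words & cues)
        if hits > best_hits:
            best, best_hits = emotion, hits
    return best


def plan_prosody(script: str) -> list[tuple[str, Prosody]]:
    """Split a script into sentences and attach a prosody setting to each."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    return [(s, PROSODY_BY_EMOTION[classify_emotion(s)]) for s in sentences]


if __name__ == "__main__":
    demo = "Wow, this is amazing! Sadly, the offer ended. See the details below."
    for sentence, prosody in plan_prosody(demo):
        print(f"{prosody.rate:.2f}x  {prosody.pitch_shift:+.1f} st  {sentence}")
```

The point of the sketch is the separation of concerns the article describes: one step decides what a sentence should feel like, and a later step turns that decision into concrete vocal parameters.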

The Physics Engine Behind Natural Movement

What truly sells InSnap's video realism is the sophisticated physics simulation that governs body language and movement. The platform's proprietary biomechanical model replicates human musculoskeletal constraints, ensuring digital characters move with proper weight distribution and joint limitations. Hair and clothing respond realistically to motion through complex particle system simulations that calculate individual strand and fabric behavior. Even environmental interactions like shadows and reflections are rendered with physically accurate light transport algorithms. This attention to physical detail helps characters avoid the "uncanny valley" effect, allowing viewers to suspend disbelief and engage with the content as they would with human performers.
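The simplest form of a joint limitation is a clamp: whatever pose the animation system proposes, each joint angle is pulled back into an allowed range. The sketch below shows only that one idea. The joint names and the degree ranges are illustrative assumptions, not anatomical reference values and not InSnap's model.

```python
# Hypothetical joint limits in degrees (min, max); values are illustrative only.
JOINT_LIMITS_DEG = {
    "elbow_flexion": (0.0, 150.0),
    "knee_flexion": (0.0, 140.0),
    "neck_rotation": (-80.0, 80.0),
}


def clamp_pose(pose: dict[str, float]) -> dict[str, float]:
    """Return a copy of the pose with every joint angle forced into its range."""
    clamped = {}
    for joint, angle in pose.items():
        low, high = JOINT_LIMITS_DEG[joint]
        clamped[joint] = min(max(angle, low), high)
    return clamped


if __name__ == "__main__":
    proposed = {"elbow_flexion": 170.0, "knee_flexion": -10.0, "neck_rotation": 30.0}
    print(clamp_pose(proposed))
```

A full biomechanical model would go well beyond this, for example by coupling joints to one another and accounting for weight distribution, but a per-joint clamp is the usual starting point.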

Real-Time Rendering Breakthroughs

InSnap's engineering team has overcome traditional rendering limitations through a hybrid approach that combines neural rendering with traditional computer graphics. Their deep learning renderer predicts light behavior using neural networks trained on millions of real-world lighting scenarios, dramatically reducing computation time while maintaining photorealistic quality. The system can generate high-fidelity frames at 60 fps by concentrating rendering resources on the areas where the viewer is looking. This real-time capability enables interactive applications where users can engage with AI characters in dynamic conversations with instant visual feedback, opening new possibilities for live streaming and personalized video content.
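Spending more rendering effort where the viewer is looking is commonly called foveated rendering. As a rough sketch of the idea, the function below divides a frame into tiles and gives each tile a sample budget that falls off with distance from a gaze point. The function name, the Gaussian falloff, and all default values are assumptions chosen for illustration and say nothing about how InSnap's renderer actually allocates work.

```python
import math


def samples_per_tile(
    grid_w: int,
    grid_h: int,
    gaze: tuple[float, float],
    max_samples: int = 16,
    min_samples: int = 1,
    falloff: float = 0.35,
) -> list[list[int]]:
    """Assign a per-tile sample count that decays with distance from the gaze.

    `gaze` is given in normalized screen coordinates, (0, 0) top-left and
    (1, 1) bottom-right. `falloff` controls how quickly quality drops off.
    """
    gx, gy = gaze
    grid = []
    for row in range(grid_h):
        out_row = []
        for col in range(grid_w):
            # Center of this tile in normalized coordinates.
            cx = (col + 0.5) / grid_w
            cy = (row + 0.5) / grid_h
            dist = math.hypot(cx - gx, cy - gy)
            weight = math.exp(-((dist / falloff) ** 2))  # Gaussian falloff
            out_row.append(max(min_samples, round(max_samples * weight)))
        grid.append(out_row)
    return grid


if __name__ == "__main__":
    for row in samples_per_tile(4, 4, gaze=(0.125, 0.125)):
        print(row)
```

Tiles near the gaze point receive the full budget and distant tiles drop to the minimum, which is where the savings in computation time come from.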

Context-Aware Content Generation

Perhaps the most impressive aspect of InSnap's technology is its semantic understanding capability. The system doesn't just string together pre-made assets - it comprehends narrative context to generate appropriate visuals. A script analysis module identifies emotional arcs, pacing, and thematic elements to guide scene composition, camera movements, and character performances. This context awareness allows for coherent long-form content generation where characters maintain consistent personalities and storylines develop naturally. The technology can even adapt content style based on target demographics, automatically adjusting everything from color palettes to editing rhythm to maximize engagement with specific audiences.

The Future of AI-Generated Video

InSnap is already pioneering the next wave of video technology with experimental features like emotion-responsive content that adapts in real time to viewer reactions captured through webcam analysis. Its research team is working on multi-character interaction systems where AI performers improvise scenes together based on loose guidelines. Looking further ahead, the company envisions a future where entire personalized movies can be generated on demand, with viewers able to customize everything from plot directions to visual styles. As the technology continues to advance, InSnap is positioned to redefine not just influencer content, but the entire landscape of digital media production.

Democratizing High-End Video Production

Beyond its technical achievements, InSnap's true innovation lies in making Hollywood-quality production accessible to anyone. The platform's user-friendly interface masks the immense complexity underneath, allowing creators to generate professional content with simple text prompts or voice commands. This democratization is disrupting traditional video production pipelines, eliminating the need for expensive equipment, crews, and post-production facilities. Small businesses can now create product videos that rival major brand campaigns, while individual creators can produce content that stands alongside studio productions - all powered by AI that handles the technical heavy lifting while humans focus on creative direction.
